Bounce rate is one of those web metrics that have always baffled webmasters. It can decide the fate of a website, whether it will grow or not. Forums and comment boxes are filled with questions like "Is my bounce rate good enough?", "Does bounce rate change according to website type?" or "What should I do? My bounce rate is too high!"

Just know this: yes, bounce rate varies with the type of website you run, and there is no high-class Google wizardry in it, just simple logic. Websites come in different types, and each type has a different typical bounce rate depending on what it does. So an online web store will have a different bounce rate from that of a personal blog.

The following article is particularly useful if you are unsure whether your bounce rate is normal. Here are the typical bounce-rate ranges for different websites, grouped by function.
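The article does not spell out how bounce rate is calculated. A common definition (used, for example, by the older Universal Analytics) is the share of sessions in which the visitor viewed only one page; Google Analytics 4 instead counts sessions that were not "engaged", so exact numbers vary by tool. Below is a minimal sketch under the simpler page-count definition. The `bounce_rate` function and the session data are hypothetical, made up for illustration.

```python
def bounce_rate(pages_per_session: list[int]) -> float:
    """Return the bounce rate as a percentage.

    Assumes the simple definition: a bounce is a session in which the
    visitor viewed exactly one page. Input is one page count per session.
    """
    if not pages_per_session:
        raise ValueError("need at least one session")
    # Count single-page sessions (the "bounces").
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100.0 * bounces / len(pages_per_session)


# Hypothetical data: 8 sessions, 3 of which viewed a single page.
sessions = [1, 4, 2, 1, 6, 3, 1, 5]
print(f"{bounce_rate(sessions):.1f}%")  # 37.5%
```

Under this definition a site with only one page scores 100%, because every session is a single-page session. This is the first of the exceptions discussed further down.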

Online Shopping/Services Websites

You run a website that offers products and lets users buy them online through a payment gateway.

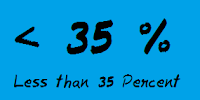

Such websites are highly popular, and the web is swarming with examples like Amazon, eBay etc. An online shopping website should have a bounce rate below 35%. The obvious question is: why such a low value? The logical answer is the large number of steps in the transaction. (For example, you select a product to buy, you are redirected to a page for selecting or entering a shipping address, then comes the order confirmation, then another page for the actual payment, where you may be directed to your credit card or bank's website, and finally the confirmation of purchase.) Every buyer therefore views several pages, so those visits never count as bounces. That is why the bounce rate must be below 35% for the website to prosper.

Offline Shopping/Services Websites

You run a website that offers a catalog of products but lets users buy only through offline methods such as money order, VPP, telephone etc.

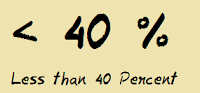

A new business might not plunge directly into online services; it may prefer to keep its sales off the web. Also, certain services involve transactions so large that handling them on the website is too risky for both the client and the provider, so they are done over the phone or through email. Examples include a housing developer's website looking for investors, or a mattress specialist not ready to sell online. Such websites should have a bounce rate below 40%.

Directories and Online Encyclopedias

You run a website that works like a dictionary, where users search for and find information. For such websites, you should not expect a bounce rate of more than 20-25%. Since these websites have everything a visitor needs or might need, he/she is not expected to leave for another website. That explains the low bounce rate.

Blogs

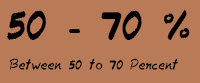

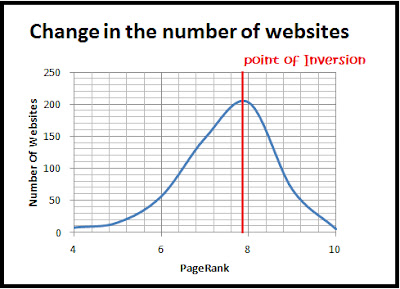

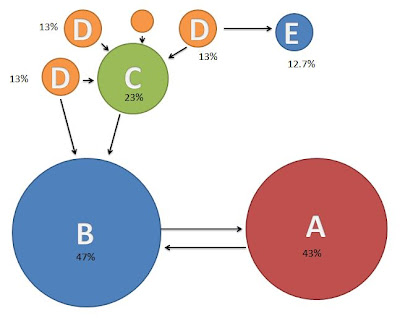

You do not run a website but a blog that is updated regularly with new posts. If you publish content from time to time, you are in for a surprise: content-based websites and pages tend to have high bounce rates, in the range of 50 to 70%. If yours is below 50%, you're in a very safe zone and need not worry.

Video Blogs or Podcasts

Though most people assume the two are the same, they are different things. A video blog is a conventional blog on a popular platform like Blogger or WordPress with one or more videos embedded in it. A podcast or media channel, on the other hand, is a page that exists to host the media itself (as on YouTube). This section applies if you run a YouTube page or a website that hosts video, music or similar media.

On websites that host videos, music or similar media, the bounce rate tends to be very low. Once users land on the page, they find many entertainment and infotainment options. They also stay on the page for a long time, which exposes them to further options. So a bounce rate of 20 to 30% is nothing to raise an eyebrow at.
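The ranges given in the sections above can be collected into a small lookup table, which makes it easy to check a measured value against the guideline for your kind of site. This is only a sketch: the site-type keys and the `check_bounce_rate` function are hypothetical names, and the ceilings are this article's rules of thumb, not an industry standard.

```python
# Upper bounds (in percent) suggested in this article for each site type.
# These are rules of thumb from the text, not official benchmarks.
SUGGESTED_MAX_BOUNCE = {
    "online_shop": 35.0,      # online shopping/services: below 35%
    "offline_catalog": 40.0,  # offline shopping/services: below 40%
    "directory": 25.0,        # directories/encyclopedias: about 20-25%
    "blog": 70.0,             # blogs: typically 50-70%
    "media": 30.0,            # video/music hosting: about 20-30%
}


def check_bounce_rate(site_type: str, rate: float) -> str:
    """Compare a measured bounce rate (percent) with the suggested ceiling."""
    limit = SUGGESTED_MAX_BOUNCE.get(site_type)
    if limit is None:
        raise KeyError(f"unknown site type: {site_type!r}")
    if rate <= limit:
        return "within the suggested range"
    return f"above the suggested {limit:.0f}% ceiling"


print(check_bounce_rate("blog", 62.0))         # within the suggested range
print(check_bounce_rate("online_shop", 48.0))  # above the suggested 35% ceiling
```

A value above the ceiling is a prompt to look closer, not a verdict; the exceptions below (very small sites, new blogs) can push a healthy site past these numbers.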

Some Exceptions

If you have a very high bounce rate, i.e. near 90 to 100%, do not lose heart; there are some exceptions to the rules above.

- A website with only 1-5 pages tends to have a bounce rate near or above 90%. This is because a website with few pages gives visitors little incentive to visit other pages. For example, a website with a single page will have a bounce rate of 100%, since every visit ends on the page where it started. So it is not a bad thing if your small website has an unbelievably high bounce rate.

- New websites and blogs need not worry, as their bounce rate will stabilize in a few weeks. New blogs do have one catch: the bounce rate will not decrease until there are enough posts for visitors to move on to after the landing page.

- Certain blogs also tend to have a bounce rate of 70 to 80%. This depends on the number of articles already on the blog and how long the blog has existed. Readers come to the blog looking for information on a particular topic and leave once satisfied, so the bounce rate tends to be high. In such situations, I would recommend the related posts technique, where links to related articles are displayed at the end of each article.
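The related posts technique mentioned above needs some rule for deciding which articles count as "related". The article does not say which; the sketch below assumes posts are tagged and ranks other posts by how many tags they share with the current one. `Post`, `related_posts` and the sample slugs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """A hypothetical blog post record: a URL slug plus its tags."""
    slug: str
    tags: set[str] = field(default_factory=set)


def related_posts(current: Post, posts: list[Post], limit: int = 3) -> list[str]:
    """Return slugs of up to `limit` posts sharing the most tags with `current`."""
    scored = []
    for post in posts:
        if post.slug == current.slug:
            continue  # never recommend the article being read
        shared = len(current.tags & post.tags)  # number of tags in common
        if shared > 0:  # skip posts with nothing in common
            scored.append((shared, post.slug))
    # list.sort is stable even with reverse=True, so ties keep input order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [slug for _, slug in scored[:limit]]


posts = [
    Post("bounce-rate-basics", {"analytics", "bounce-rate"}),
    Post("improve-bounce-rate", {"bounce-rate", "seo"}),
    Post("page-speed-tips", {"seo", "performance"}),
    Post("blogging-schedule", {"blogging"}),
]
print(related_posts(posts[1], posts))  # ['bounce-rate-basics', 'page-speed-tips']
```

Counting shared tags is only one option; a blogging platform may instead match on categories or text similarity, or offer a ready-made related-posts widget.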

Where You Went Wrong

After reading this, if you still think your bounce rate is on the higher side, there might be reasons behind it. I have listed a few of them; comment if you know of others.

- You are lazy (a couch-potato blogger)

- You have not posted recently (learn what happens when a daily-post blog is left untouched for a week)

- You are not giving enough attention to your website's content, or to the steps that come after good content

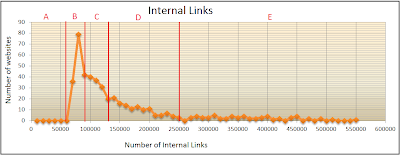

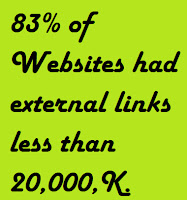

- A lack of external and internal links

- A high page load time, possibly so high that users leave before your website even loads

- You have not read the article on 4 sure-shot ways to improve your bounce rate.

This should have answered your question.
